Shutong Wu

PhD Student in Computer Science

I'm a second-year PhD student at the University of Rochester, advised by Prof. Zhen Bai in the Interplay Lab. My research focuses on Mixed Reality and 3D Generation, with particular interest in building systems that facilitate interactive, immersive scene generation and in understanding how users perceive such experiences.

Recent Updates

- Dec 2025: Volunteered at SIGGRAPH and SIGGRAPH Asia 2025!

- Oct 2025: Unity-MCP was accepted to the SIGGRAPH Asia 2025 Technical Communications track.

- Jul 2025: Maintainer and core contributor to the open-source Unity-MCP repository (GitHub).

- May 2025: AGen received a Best LBW Paper nomination at AIED 2025 (Paper).

- Sep 2024: Started my PhD at the University of Rochester, advised by Prof. Zhen Bai in the Interplay Lab.

Research Interests

Mixed Reality

Exploring immersive AR/VR experiences, interaction techniques, and novel applications that enhance user engagement and productivity in mixed reality environments.

3D Generation

Investigating generative AI approaches for 3D content creation, with a focus on enabling users to create and manipulate 3D worlds intuitively.

Human-Computer Interaction

Understanding how users interact with complex 3D systems, evaluating user experiences, and designing interfaces that facilitate creative workflows.

Analogy & Analogical Learning

Investigating how people generate and understand analogies, leveraging analogical reasoning to develop innovative educational tools and exploring novel computational approaches to analogical thinking.

Publications

MCP-Unity: Protocol-Driven Framework for Interactive 3D Authoring

Technical report for the Unity-MCP project, describing how we designed, implemented, and evaluated the repository for 3D authoring within the Unity Editor.

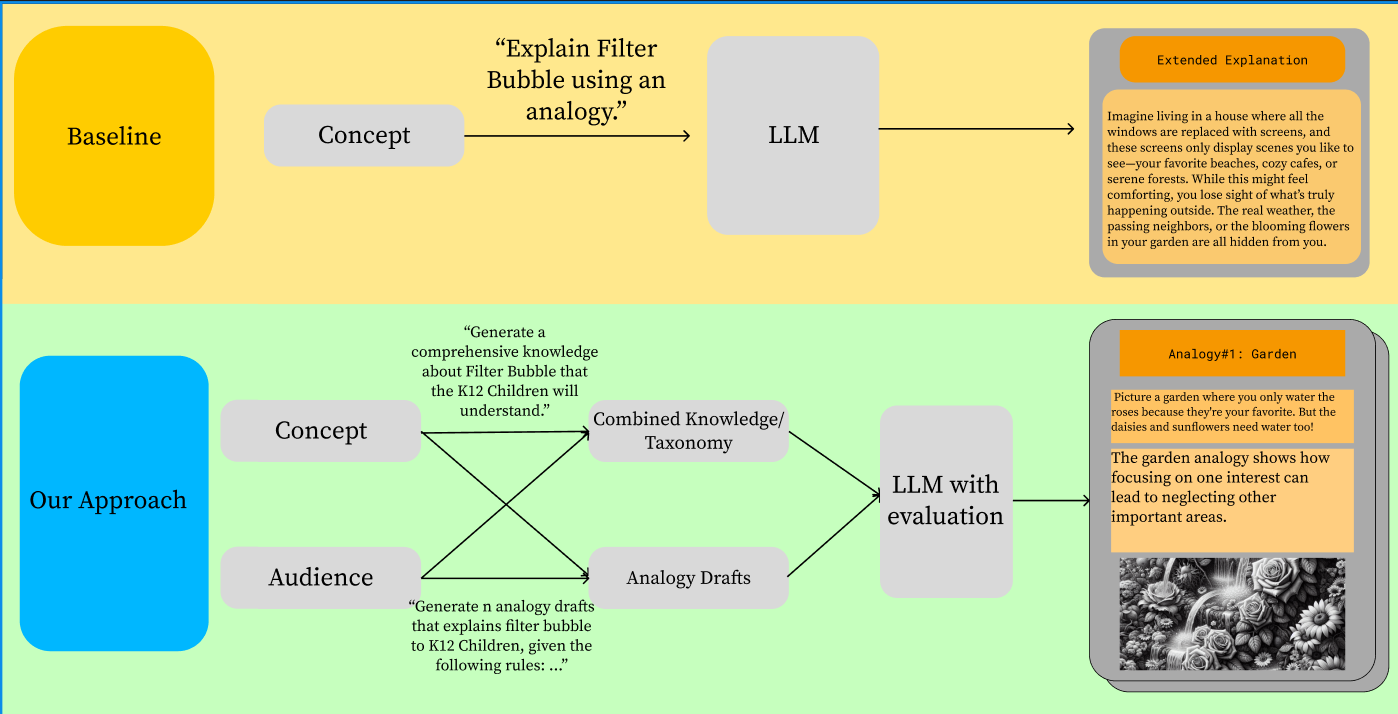

AGen: Personalized Analogy Generation through LLM

An analogy generation platform that creates tailored analogies based on user profiles and education levels, enhancing personalized learning experiences.

Current Projects

Unity-MCP

Maintainer and core contributor to the Unity-MCP (Model Context Protocol) project. Highlights include a CLI, shader, VFX, material, and texture support, and runtime compilation.

VR-MCP (In Development)

Extending MCP capabilities to VR environments, integrating AI language models with both VR development workflows and in-VR interaction.

Diminished Reality for Enhanced Focus

An adaptive Mixed Reality technique that leverages semantic understanding to visually remove everyday objects, improving user focus and productivity in AR environments.

Past Projects

VR Vision Testing (Penn Medicine)

Designed and implemented VR vision tests on the Quest 2 platform, providing accessible virtual alternatives to physical vision tests for low-vision patients. Secured two patents for novel VR-based vision testing methodologies.

Side Projects

ShaderWasp

A promptable GLSL/HLSL/Slang shader playground that generates real-time visuals in the browser, inspired by shader lab workflows.

PointWisp (3DGS / PLY Viewer)

A lightweight static web app for viewing, editing, and exporting PLY files and 3D Gaussian Splatting (3DGS) scenes.

Literature Seekers

A personal tool for quickly retrieving papers and finding relevant literature for new research ideas. It currently supports keyword-based and semantic search across Semantic Scholar, arXiv, and GitHub.

Experience

University of Rochester

- Research focus on Mixed Reality, 3D Generation, and Human-Computer Interaction

- Advised by Prof. Zhen Bai in the Interplay Lab

- GPA: 4.0/4.0

- Teaching Assistant for AR/VR Interaction, Intro to AI, and Computer Algorithms

Penn Medicine Ophthalmology

- Designed and implemented VR vision tests on the Quest 2 platform for low-vision patients

- Secured two patents for novel VR-based vision testing methodologies

- Developed an end-to-end VR software solution within 9 months

- Utilized Unity XR, custom shaders, and post-processing techniques

Penn CG Lab

- Collaborated with Prof. Lingjie Liu on a NeRF-based research project

- Created Unity animation infrastructure in C# for volumetric scene generation

- Developed C++ plugins to convert SMPL files to FBX animations

ByteDance

- Developed efficiency tools including Overdraw and Mipmap Collector

- Reduced average frame time by 10 ms through graphics optimization

- Collaborated with game studios on performance analysis and optimization

NetEase Games

- Developed mobile game features and mechanics

- Optimized game performance and user experience

- Collaborated with cross-functional teams on game development projects

Technical Skills

Programming Languages

Graphics & VR

AI & ML

Tools & Frameworks

Contact

I'm always interested in discussing research collaborations, new opportunities, or just connecting with fellow researchers in AR/VR and 3D generation.

Office: Wegmans Hall, University of Rochester, Rochester, NY

Lab: Interplay Lab